Enterprise Data Recovery from Cloud-Based Storage Synchronization Failures: OneDrive, SharePoint, and Google Drive Corruption

Introduction

The promise of enterprise cloud platforms like Microsoft 365 (OneDrive/SharePoint) and Google Workspace (Google Drive) is seamless collaboration, accessible data, and automatic redundancy. Organizations trust these platforms implicitly, believing that catastrophic data loss, the kind that requires intervention, is a problem the hyperscalers have already solved.

However, a new and insidious form of data loss has emerged: the cloud sync failure.

This crisis is not caused by a physical hard drive crash, a fire, or a failed head assembly. It is a logical corruption stemming from the very mechanism designed to ensure continuity: the synchronization engine. When thousands of users, complex permissions, and intricate version control systems collide, the result can be a silent, systemic failure in which the correct version of a file is overwritten, deleted, or made inaccessible by an errant sync command.

Recovering from these failures is considerably harder than recovering a local drive. It demands a specialized form of enterprise cloud data forensics, with deep expertise in API-level log analysis, versioning schema reconstruction, and secure reconciliation of local endpoint copies against cloud storage.

The Hidden Threat: Three Modes of Cloud Synchronization Failure

For IT administrators and compliance officers, the most dangerous cloud failures are the ones that happen quietly, without triggering an immediate outage alarm. These failures are primarily rooted in conflicts between local desktop agents and the centralized cloud service.

1. The Versioning Conflict Catastrophe

In a collaborative cloud environment, users are often working on the same file simultaneously. The cloud platform uses version control to manage these conflicts.

- How it Fails: A common scenario involves two users editing a document offline. When both reconnect, the sync engine attempts to merge the changes. If the conflict-resolution logic is flawed, or if one user’s sync agent times out, the system may declare one user’s version authoritative and silently overwrite the other user’s changes, effectively deleting hours or days of work. Worse, in complex structures like SharePoint document libraries, a metadata conflict (such as a change to a list column) can sometimes revert the entire document to an old, incorrect state or leave it permanently inaccessible. Microsoft provides extensive documentation on managing versioning in SharePoint Online, but even minor misconfigurations can lead to significant data loss during high-volume syncs.
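
The difference between silently discarding work and preserving it fits in a few lines. The minimal sketch below uses assumed data shapes. It contrasts a naive last-writer-wins resolver with one that notices when two offline edits branched from the same server version. The `Edit` record and both functions are hypothetical. They do not reproduce how OneDrive, SharePoint, or Google Drive actually resolve conflicts.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Edit:
    """Hypothetical record of one offline edit awaiting sync."""
    author: str
    base_version: int   # server version the user started editing from
    content: str
    saved_at: float     # client-side save time, in seconds


def last_writer_wins(a: Edit, b: Edit) -> List[Edit]:
    """Naive resolution: keep the most recent save and silently discard the other."""
    return [max(a, b, key=lambda e: e.saved_at)]


def detect_conflict(current_version: int, a: Edit, b: Edit) -> List[Edit]:
    """Keep both edits when they are concurrent, i.e. both started from the
    current server version and neither saw the other's changes."""
    concurrent = a.base_version == b.base_version == current_version
    if concurrent and a.content != b.content:
        return [a, b]   # surface both (e.g. as a conflict copy) for a human to merge
    return last_writer_wins(a, b)


# Two users edit version 7 offline; Bob's save carries the later timestamp.
alice = Edit("alice", base_version=7, content="Q3 forecast, revised totals", saved_at=100.0)
bob = Edit("bob", base_version=7, content="Q3 forecast, added notes", saved_at=160.0)

print([e.author for e in last_writer_wins(alice, bob)])    # ['bob']  (Alice's work is gone)
print([e.author for e in detect_conflict(7, alice, bob)])  # ['alice', 'bob']
```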

2. The Silent Overwrite and Phantom Deletion

This is the most frustrating type of cloud sync failure because it appears as if the file simply vanished.

- The Sync Agent Error: A local synchronization agent (the OneDrive desktop client, or Google Drive for desktop, formerly Drive File Stream) may encounter a corrupt local file index. Instead of uploading the correct data, the agent misinterprets the “corrupted” state as a signal to sync a local deletion, or an empty file, back to the cloud. The cloud service, trusting the local agent, executes the command and overwrites the intact cloud version with an empty file or marks it as deleted.

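- The Synchronization Backwash: If the affected file is a critical repository (like a large database file or a financial spreadsheet), the silent overwrite propagates to every endpoint that syncs it, and all users see the corrupted file. Because the change arrived as a legitimate sync operation, the trash or recycle bin is an unreliable recovery path. An overwrite never lands there at all; the prior content survives only in version history, if anywhere. A propagated deletion is held only for a limited retention window (30 days in Google Drive’s trash, for example) before it is purged.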
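
The safeguard that would stop this failure mode can be sketched as a pre-sync check. Before propagating deletions, the client refuses to act on a damaged index or on a suspiciously large batch of deletions. It also flags files that have silently shrunk to zero bytes. The index shape, the 5% threshold, and the function names are assumptions for illustration. Real sync clients use their own logic, which this does not reproduce.

```python
from typing import Dict, List

# Assumed index shape: file path -> size in bytes.
Index = Dict[str, int]


def suspicious_truncations(local: Index, cloud: Index) -> List[str]:
    """Paths that are empty locally but non-empty in the cloud: likely damage,
    not a genuine edit, so they should not be uploaded without review."""
    return sorted(p for p, size in local.items() if size == 0 and cloud.get(p, 0) > 0)


def plan_deletions(local: Index, cloud: Index, index_healthy: bool,
                   max_fraction: float = 0.05) -> List[str]:
    """Return cloud paths to delete because they vanished locally, or raise
    instead of propagating deletions that look like index corruption."""
    if not index_healthy:
        # A damaged index says nothing reliable about what the user deleted.
        raise RuntimeError("local index failed integrity check; deletions not synced")
    missing = sorted(p for p in cloud if p not in local)
    if cloud and len(missing) / len(cloud) > max_fraction:
        # A mass disappearance is more likely corruption than intent.
        raise RuntimeError(f"{len(missing)} of {len(cloud)} files would be deleted; review required")
    return missing


cloud = {f"doc{i}.txt": 10 for i in range(40)}
cloud["finance/ledger.xlsx"] = 48_213

# One genuinely deleted document and one file truncated to zero bytes.
local = {p: s for p, s in cloud.items() if p != "doc3.txt"}
local["finance/ledger.xlsx"] = 0

print(plan_deletions(local, cloud, index_healthy=True))  # ['doc3.txt']
print(suspicious_truncations(local, cloud))             # ['finance/ledger.xlsx']
```

The design choice here is to fail closed. When the local state looks implausible, the sketch stops and asks for review rather than letting the cloud copy absorb the damage.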

3. The Policy and Retention Conflict Trap

Enterprise environments enforce strict retention policies for compliance (e.g., keeping emails for seven years). When data moves, these policies can collide.

- Cross-Platform Loss: Data often flows between different cloud containers (e.g., a file moves from a user’s OneDrive to a SharePoint team site, or from a user’s My Drive in Google Drive to a Shared Drive). If the destination container has a more aggressive deletion or retention policy than the source, the sync operation may delete the correct version from the source before the destination has successfully ingested it. Alternatively, it may trigger a policy-based deletion in the new location that the administrator did not intend.

- Archiving Errors: When offboarding an employee, an administrator might migrate the user’s files to an archive storage class. If the migration script or API call fails to flag the files correctly as “retained,” the cloud provider’s garbage collection may sweep them away after the user account is deactivated, mistakenly treating them as ordinary trash.
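
Both failure modes come down to the same ordering mistake. The source copy is removed before anyone confirms that the destination holds the right bytes and the right retention flag. A minimal copy-verify-delete sketch follows. `Container`, the `upload` callback, and the label string `retain-7y` are hypothetical stand-ins for a provider's storage API and retention labels.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Container:
    """Hypothetical stand-in for a storage container (a OneDrive, a SharePoint
    site, a Shared Drive, an archive). A real migration would call the
    provider's API instead of touching these dictionaries."""
    name: str
    files: Dict[str, bytes] = field(default_factory=dict)
    labels: Dict[str, str] = field(default_factory=dict)   # path -> retention label


# Signature of the (hypothetical) provider call that writes one file.
Upload = Callable[[Container, str, bytes, Optional[str]], None]


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def safe_move(path: str, src: Container, dst: Container, upload: Upload,
              label: Optional[str] = None) -> None:
    """Copy, verify, and only then delete the source copy."""
    data = src.files[path]
    upload(dst, path, data, label)
    # 1. Read back from the destination and compare checksums.
    if digest(dst.files.get(path, b"")) != digest(data):
        raise RuntimeError(f"{path}: content mismatch in {dst.name}; source kept")
    # 2. Confirm the retention label actually landed.
    if label is not None and dst.labels.get(path) != label:
        raise RuntimeError(f"{path}: retention label missing in {dst.name}; source kept")
    del src.files[path]


def good_upload(dst: Container, path: str, data: bytes, label: Optional[str]) -> None:
    dst.files[path] = data
    if label is not None:
        dst.labels[path] = label


def label_dropping_upload(dst: Container, path: str, data: bytes, label: Optional[str]) -> None:
    dst.files[path] = data  # bytes arrive, but the retention label is silently lost


home = Container("onedrive:jdoe", files={"contract.pdf": b"%PDF-1.7 ..."})
archive = Container("archive")

try:
    safe_move("contract.pdf", home, archive, label_dropping_upload, label="retain-7y")
except RuntimeError as err:
    print(err)  # contract.pdf: retention label missing in archive; source kept

safe_move("contract.pdf", home, archive, good_upload, label="retain-7y")
print(sorted(home.files), archive.labels)  # [] {'contract.pdf': 'retain-7y'}
```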

The Forensic Challenge: Why Native Tools Fail

When a file is lost due to a synchronization error, the first instinct is to check the recycle bin or the version history. However, in major logical corruption incidents, these native tools fail because they only show the end result, not the root cause.

- Limited API Log Depth: While cloud providers offer API logging (often for security monitoring), these logs are complex, immense, and often lack the granular file-level detail needed for forensic reconstruction. They might confirm that a file was “modified” by a specific user at a specific time, but they won’t reveal which version was uploaded or why the sync agent decided to overwrite the existing file.

- Version History Limits: The native version control feature often has practical or configured limits (e.g., retaining only the last 50 versions or retaining versions for 90 days). If the cloud sync failure and subsequent overwrites occurred over a long period, the last correct version may have rolled off the retention window, making it permanently inaccessible through the user interface.

- No Deep Snapshot Access: Cloud providers typically do not offer user-accessible, point-in-time snapshots of the entire storage environment. Unlike a local drive, where a forensic image captures every single sector, the file-level view provided by the cloud is the only access available, preventing deep file system analysis.
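
Whether the last good version has already rolled off can be estimated with simple arithmetic. The sketch below models a simplified policy that caps both the number and the age of retained versions. The policy semantics, the function name, and the data shape are assumptions. Real platforms apply their own trimming rules.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Version = Tuple[str, datetime]   # (version id, created at); assumed shape


def still_retained(versions: List[Version], version_id: str, max_versions: int,
                   max_age: timedelta, now: datetime) -> bool:
    """Would `version_id` survive a simplified policy that keeps at most
    `max_versions` of the newest versions, none older than `max_age`?
    `versions` must be ordered oldest to newest."""
    if max_versions < 1:
        raise ValueError("max_versions must be at least 1")
    cutoff = now - max_age
    return any(vid == version_id and created >= cutoff
               for vid, created in versions[-max_versions:])


start = datetime(2024, 1, 1)
# The last good save is v0; a faulty agent then overwrites the file once a day for 60 days.
history = [(f"v{d}", start + timedelta(days=d)) for d in range(61)]
day_60 = start + timedelta(days=60)

print(still_retained(history, "v0", 50, timedelta(days=90), day_60))   # False: pushed out by count
print(still_retained(history, "v0", 500, timedelta(days=90), day_60))  # True
print(still_retained(history, "v0", 500, timedelta(days=90), start + timedelta(days=120)))  # False: too old
```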

The Forensic Protocol: Reconstructing the Digital Truth

Recovering data from cloud sync failure demands a specialist approach that operates at the API and database level, not the file level.

Step 1: Deep API-Level Log Analysis

The first step is to treat the cloud platform’s audit logs as the primary crime scene.

- Extraction and Normalization: Forensic specialists extract all relevant API logs and audit trails (e.g., Microsoft’s Unified Audit Log or Google Workspace’s Drive log events) for the time frame of the data loss. These logs are massive and inconsistently structured, so specialized tools normalize them into a common schema before analysis.

- Timeline Reconstruction: The software reconstructs a granular timeline of every operation the logs record on the affected file. This covers when it was read and modified, which user and device (where logged) performed each operation, and, most critically, the operations that led to the deletion or overwrite. This analysis can often pinpoint the specific sync agent, device, or script responsible. For IT security professionals, understanding the depth of these logs is crucial; resources like Microsoft’s documentation on the Unified Audit Log provide essential insights.
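
Normalization and timeline reconstruction can be sketched together. Records from each source are mapped into one `Event` schema, filtered to the affected file, sorted, and scanned for the first destructive operation. The raw record shapes are assumptions. The Microsoft 365 field names are modeled loosely on Unified Audit Log records, and the Google Drive shape is a hypothetical flattened one. Real exports carry many more fields and need provider-specific parsing.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Event:
    """Common schema that every provider's log record is normalized into."""
    time: datetime
    actor: str
    action: str    # normalized verb: "modify", "delete", "upload", ...
    file_id: str
    device: str


# Assumed mappings from provider operation names to the common vocabulary.
M365_ACTIONS = {"FileModified": "modify", "FileDeleted": "delete", "FileUploaded": "upload"}
GDRIVE_ACTIONS = {"edit": "modify", "trash": "delete", "delete": "delete", "upload": "upload"}


def from_m365(rec: dict) -> Event:
    # Field names modeled on Unified Audit Log records (simplified).
    return Event(datetime.fromisoformat(rec["CreationTime"]), rec["UserId"],
                 M365_ACTIONS.get(rec["Operation"], rec["Operation"]),
                 rec["ObjectId"], rec.get("UserAgent", "unknown"))


def from_gdrive(rec: dict) -> Event:
    # Hypothetical flattened shape for a Google Workspace Drive log event.
    return Event(datetime.fromisoformat(rec["time"]), rec["actor"],
                 GDRIVE_ACTIONS.get(rec["event"], rec["event"]),
                 rec["doc_id"], rec.get("device", "unknown"))


def timeline(events: Iterable[Event], file_id: str) -> List[Event]:
    """All events for one file, oldest first."""
    return sorted((e for e in events if e.file_id == file_id), key=lambda e: e.time)


def locate_loss(events: Iterable[Event], file_id: str) -> Optional[Tuple[Optional[Event], Event]]:
    """Return (last event before the first deletion, the deletion itself), or None."""
    history = timeline(events, file_id)
    for i, event in enumerate(history):
        if event.action == "delete":
            return (history[i - 1] if i > 0 else None, event)
    return None


# Illustrative records, deliberately out of order.
events = [from_m365(r) for r in [
    {"CreationTime": "2024-03-05T09:12:00", "UserId": "alice@example.com",
     "Operation": "FileModified", "ObjectId": "fin/ledger.xlsx", "UserAgent": "laptop-17"},
    {"CreationTime": "2024-03-05T11:41:00", "UserId": "bob@example.com",
     "Operation": "FileDeleted", "ObjectId": "fin/ledger.xlsx", "UserAgent": "desktop-02"},
    {"CreationTime": "2024-03-05T11:40:00", "UserId": "bob@example.com",
     "Operation": "FileModified", "ObjectId": "fin/ledger.xlsx", "UserAgent": "desktop-02"},
]] + [from_gdrive({"time": "2024-03-05T10:00:00", "actor": "carol@example.com",
                   "event": "edit", "doc_id": "1AbC", "device": "chromebook"})]

before, loss = locate_loss(events, "fin/ledger.xlsx")
print(before.time, before.actor)  # 2024-03-05 11:40:00 bob@example.com
print(loss.actor, loss.device)    # bob@example.com desktop-02
```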

Step 2: Version Chain Repair and Bypass

Once the timeline confirms the last correct version, the focus shifts to retrieving it.

- Hidden Version Access: Even if the last correct version has fallen outside the user-facing version history limit, the provider’s underlying storage may still retain the data for a longer period. Forensic methods use administrative and API-level access, rather than the user interface, to look for copies that are no longer listed in version history but may still be retained, such as items in SharePoint’s second-stage recycle bin, content preserved by a retention policy, or the provider’s own backups. Whether any such copy still exists depends on the platform, its retention configuration, and how much time has passed.

- Restoring the Link: If the correct file is located, the forensic team uses a targeted API call to restore that version into the file’s version history. This makes it the current, authoritative copy and triggers a sync that corrects the error across all endpoints.
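
For OneDrive and SharePoint files whose correct version is still listed in version history, Microsoft Graph exposes this operation directly. `GET /drives/{drive-id}/items/{item-id}/versions` lists a file's versions, and `POST /drives/{drive-id}/items/{item-id}/versions/{version-id}/restoreVersion` promotes one back to current. The sketch below picks the newest non-empty version saved before the incident and builds the restore request without sending it. The selection rule, the placeholder IDs and token, and the sample response data are assumptions. Copies held outside version history would need different tooling.

```python
import urllib.request
from datetime import datetime, timezone
from typing import List, Optional

GRAPH = "https://graph.microsoft.com/v1.0"


def parse_ts(value: str) -> datetime:
    # Graph returns ISO 8601 UTC timestamps ending in "Z".
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def pick_last_good(versions: List[dict], incident: datetime) -> Optional[dict]:
    """Newest non-empty version saved strictly before the incident.

    `versions` is the "value" array returned by
    GET /drives/{drive-id}/items/{item-id}/versions.
    """
    candidates = [v for v in versions
                  if parse_ts(v["lastModifiedDateTime"]) < incident and v.get("size", 0) > 0]
    return max(candidates, key=lambda v: parse_ts(v["lastModifiedDateTime"]), default=None)


def restore_request(drive_id: str, item_id: str, version_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) the restoreVersion call; IDs are assumed URL-safe."""
    url = f"{GRAPH}/drives/{drive_id}/items/{item_id}/versions/{version_id}/restoreVersion"
    return urllib.request.Request(url, data=b"", method="POST",
                                  headers={"Authorization": f"Bearer {token}"})


versions = [  # illustrative response data, newest first
    {"id": "3.0", "lastModifiedDateTime": "2024-03-05T11:41:00Z", "size": 0},
    {"id": "2.0", "lastModifiedDateTime": "2024-03-05T11:40:00Z", "size": 48213},
    {"id": "1.0", "lastModifiedDateTime": "2024-03-04T16:00:00Z", "size": 47990},
]
incident = datetime(2024, 3, 5, 11, 41, tzinfo=timezone.utc)
good = pick_last_good(versions, incident)
req = restore_request("DRIVE_ID", "ITEM_ID", good["id"], token="ACCESS_TOKEN")
print(req.get_method(), req.full_url)
# POST https://graph.microsoft.com/v1.0/drives/DRIVE_ID/items/ITEM_ID/versions/2.0/restoreVersion
# Sending it with urllib.request.urlopen(req) requires a real token with write access.
```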

Step 3: Endpoint Reconciliation and Policy Review

The final step is ensuring the recovered data is clean and secured against future failure.

-

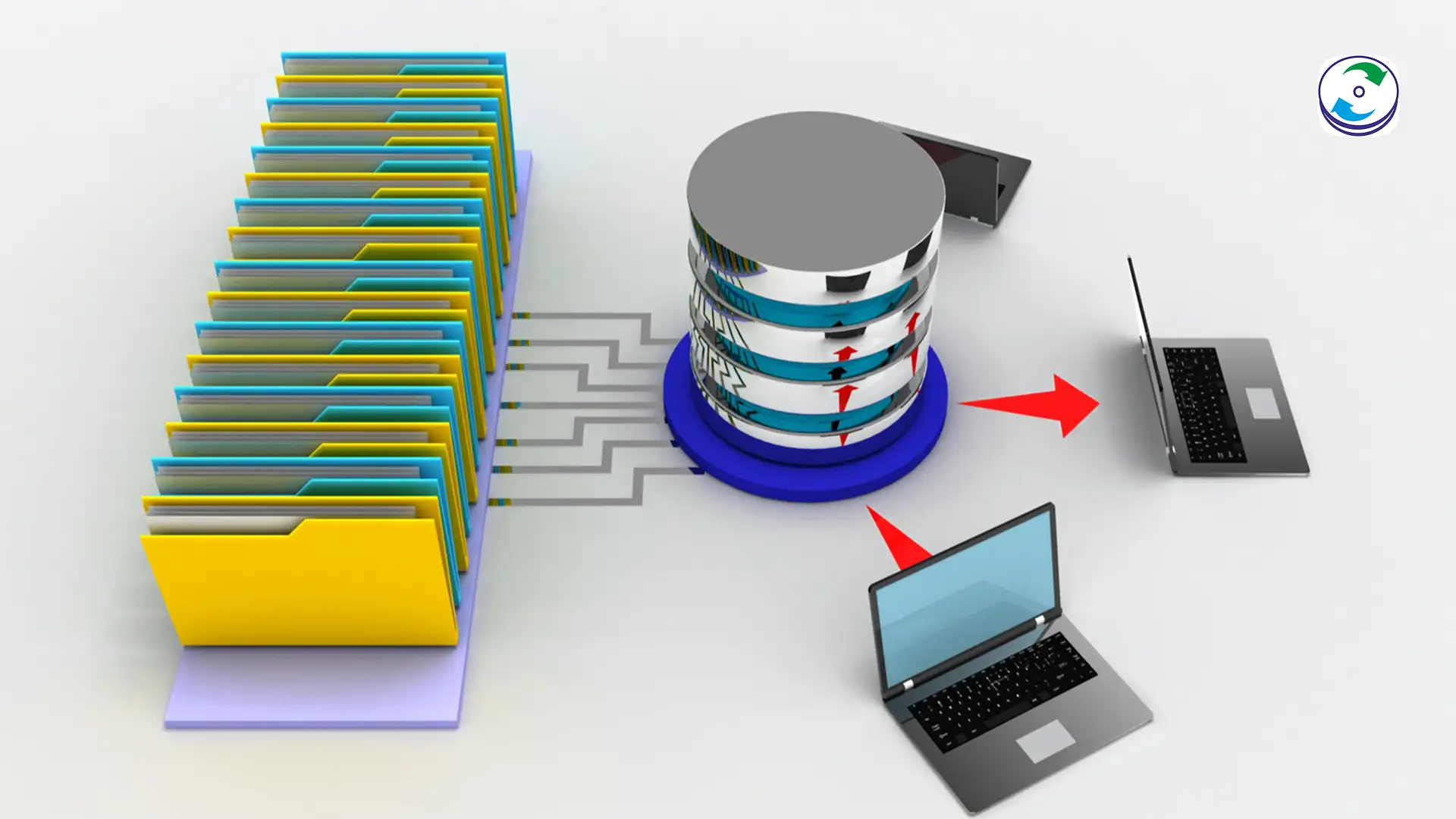

Endpoint Reconciliation: The recovered cloud file is compared against any surviving local copies on user endpoints. This reconciliation step confirms data integrity and ensures that the corrected file synchronizes properly to all local machines.

- Policy Hardening: The forensic investigation often reveals the root cause to be a flaw in the organizational security or retention policies. The specialist provides a detailed report recommending adjustments to version control limits, sync agent configurations, and archival procedures to prevent similar future logical corruption events.
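
The endpoint reconciliation step largely amounts to comparing content hashes. A minimal sketch follows. It assumes the recovered file and each endpoint's local copy have been collected to paths the analyst can read. The device names, the three status labels, and the choice of SHA-256 are illustrative assumptions.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict


def sha256_file(path: Path, chunk: int = 1 << 20) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()


def reconcile(recovered: Path, endpoint_copies: Dict[str, Path]) -> Dict[str, str]:
    """Classify each endpoint's local copy against the recovered file."""
    reference = sha256_file(recovered)
    report = {}
    for device, copy in endpoint_copies.items():
        if not copy.exists():
            report[device] = "missing"
        elif sha256_file(copy) == reference:
            report[device] = "match"
        else:
            report[device] = "differs"
    return report


with tempfile.TemporaryDirectory() as tmp:
    d = Path(tmp)
    (d / "recovered.xlsx").write_bytes(b"ledger v2")
    (d / "laptop-17.xlsx").write_bytes(b"ledger v2")
    (d / "desktop-02.xlsx").write_bytes(b"")
    report = reconcile(d / "recovered.xlsx", {
        "laptop-17": d / "laptop-17.xlsx",
        "desktop-02": d / "desktop-02.xlsx",
        "tablet-05": d / "tablet-05.xlsx",
    })

print(report)  # {'laptop-17': 'match', 'desktop-02': 'differs', 'tablet-05': 'missing'}
```

A `differs` result is not automatically an error. That copy may hold edits made after the incident, so it is worth reviewing before the corrected file is allowed to overwrite it.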

Conclusion: Data Loss in a Cloud-First World

The shift to the cloud has transformed data loss from a hardware problem into a logical one. The cloud sync failure is a persistent, growing threat because it stems from the complex interplay of synchronization agents, user behavior, and large, constantly changing data policies.

When critical corporate documents are lost to OneDrive corruption, SharePoint versioning loss, or a Google Drive synchronization error, the standard recovery options are often exhausted within minutes. The only path forward is specialized enterprise cloud data forensics: analyzing the API logs, working around the failed version control mechanisms, and reconstructing the correct data timeline.

If your organization has suffered a widespread data loss event in its collaborative cloud platform, do not attempt to brute-force a re-sync or rely on generic software. You risk propagating the corruption further. Contact DataCare Labs immediately to deploy our specialized protocol for secure, API-level data reconciliation and recovery.